Neural network architecture overview

An overview of the main types of neural network architecture

Feed-forward neural networks

Figure 1: Feed-forward neural network

A feedforward neural network is an artificial neural network wherein connections between the units do not form a cycle. As such, it is different from recurrent neural networks.

The feedforward neural network was the first and simplest type of artificial neural network devised. In this network, the information moves in only one direction, forward, from the input nodes, through the hidden nodes (if any) and to the output nodes. There are no cycles or loops in the network.

These are the commonest type of neural network in practical applications.

- The first layer is the input and the last layer is the output.

- If there is more than one hidden layer, we call them “deep” neural networks.

They compute a series of transformations that change the similarities between cases.

- The activities of the neurons in each layer are a non-linear function of the activities in the layer below.
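The layer-by-layer computation described above can be sketched in a few lines of plain Python. This is only an illustration under assumptions: the function names, the choice of `tanh` as the non-linearity, and the tiny made-up weights are not from the lecture.

```python
import math

def layer_forward(activities, weights, biases):
    """Compute one layer as a non-linear function of the layer below."""
    outputs = []
    for unit_weights, bias in zip(weights, biases):
        z = bias + sum(w * a for w, a in zip(unit_weights, activities))
        outputs.append(math.tanh(z))  # tanh is an assumed choice of non-linearity
    return outputs

def feedforward(inputs, layers):
    """Pass activities forward through each (weights, biases) pair in turn."""
    activities = inputs
    for weights, biases in layers:
        activities = layer_forward(activities, weights, biases)
    return activities

# Hypothetical network: 2 inputs, 3 hidden units, 1 output, made-up weights.
layers = [
    ([[0.5, -0.2], [0.1, 0.8], [-0.7, 0.3]], [0.0, 0.1, -0.1]),
    ([[1.0, -1.0, 0.5]], [0.2]),
]
output = feedforward([1.0, 2.0], layers)
```

Information only moves forward here: each layer reads the activities of the layer below and nothing feeds back.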

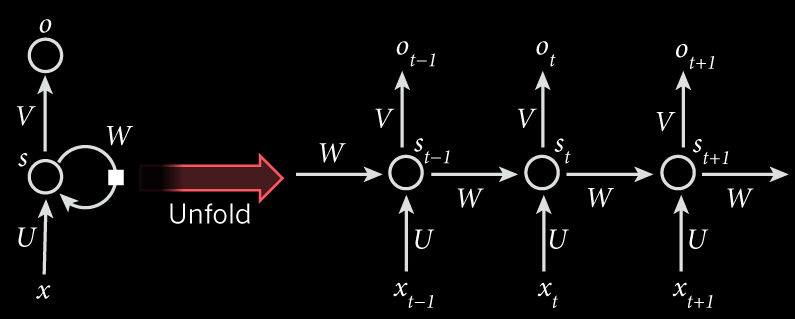

Recurrent networks

Figure 2: Recurrent network

A recurrent neural network (RNN) is a class of artificial neural network where connections between units form a directed cycle. This creates an internal state of the network which allows it to exhibit dynamic temporal behavior. Unlike feedforward neural networks, RNNs can use their internal memory to process arbitrary sequences of inputs. This makes them applicable to tasks such as unsegmented connected handwriting recognition or speech recognition.

These have directed cycles in their connection graph.

- That means you can sometimes get back to where you started by following the arrows. They can have complicated dynamics and this can make them very difficult to train.

- There is a lot of interest at present in finding efficient ways of training recurrent nets. They are more biologically realistic.

Recurrent nets with multiple hidden layers are just a special case that has some of the hidden → hidden connections missing.

Recurrent neural networks for modeling sequences

Figure 3: RNN unfold view

Recurrent neural networks are a very natural way to model sequential data:

- They are equivalent to very deep nets with one hidden layer per time slice.

- Except that they use the same weights at every time slice and they get input at every time slice.

They have the ability to remember information in their hidden state for a long time.

- But it's very hard to train them to use this potential.
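As a rough sketch of the unrolled view, the loop below treats each time slice as one layer and reuses the same two weight matrices at every step. The names `w_in` and `w_hh`, the `tanh` non-linearity, and the sizes are assumptions made for illustration.

```python
import math

def rnn_forward(inputs, w_in, w_hh, h0):
    """Run a simple recurrent net over a sequence, reusing the same weights
    (w_in, w_hh) at every time step. Returns the hidden state at each step."""
    hidden = h0
    states = []
    for x in inputs:  # one "layer" of the unrolled net per time slice
        new_hidden = []
        for j in range(len(hidden)):
            z = sum(w_in[j][i] * x[i] for i in range(len(x)))
            z += sum(w_hh[j][k] * hidden[k] for k in range(len(hidden)))
            new_hidden.append(math.tanh(z))
        hidden = new_hidden
        states.append(hidden)
    return states

# Hypothetical sizes and weights: 1 input unit, 2 hidden units, 3 time steps.
w_in = [[1.0], [0.5]]
w_hh = [[0.0, 0.5], [0.5, 0.0]]
states = rnn_forward([[1.0], [0.0], [0.0]], w_in, w_hh, [0.0, 0.0])
```

Only the first time step receives a non-zero input, yet the hidden state at the last step is still non-zero: the hidden-to-hidden connections carry the information forward in time.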

An example of what recurrent neural nets can now do

(to whet your interest!)

Ilya Sutskever (2011) trained a special type of recurrent neural net to predict the next character in a sequence.

After training for a long time on a string of half a billion characters from English Wikipedia, he got it to generate new text.

- It generates by predicting the probability distribution for the next character and then sampling a character from this distribution.

- The next slide shows an example of the kind of text it generates.

Notice how much it knows!
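The generation step can be sketched as follows. The probabilities are made up and stand in for a trained model's prediction; the function names are hypothetical.

```python
import random

def sample_next(probabilities, rng):
    """Sample a character in proportion to its predicted probability."""
    chars = list(probabilities)
    weights = [probabilities[c] for c in chars]
    return rng.choices(chars, weights=weights, k=1)[0]

def most_likely(probabilities):
    """Greedy alternative: always pick the single most probable character."""
    return max(probabilities, key=probabilities.get)

# Made-up next-character distribution, not the output of a real model.
predicted = {"e": 0.6, "a": 0.3, "z": 0.1}
rng = random.Random(0)
samples = [sample_next(predicted, rng) for _ in range(1000)]
```

The greedy choice returns the same character every time, whereas sampling lets a character with probability 0.1 appear roughly one time in ten.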

Some text generated one character at a time

by Ilya Sutskever’s recurrent neural network

In 1974 Northern Denver had been overshadowed by CNL, and several Irish intelligence agencies in the Mediterranean region. However, on the Victoria, Kings Hebrew stated that Charles decided to escape during an alliance. The mansion house was completed in 1882, the second in its bridge are omitted, while closing is the proton reticulum composed below it aims, such that it is the blurring of appearing on any well-paid type of box printer.

Symmetrically connected networks

These are like recurrent networks, but the connections between units are symmetrical (they have the same weight in both directions).

- John Hopfield (and others) realized that symmetric networks are much easier to analyze than recurrent networks.

- They are also more restricted in what they can do because they obey an energy function.

For example, they cannot model cycles. Symmetrically connected nets without hidden units are called Hopfield nets.
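One way to see what obeying an energy function means is the sketch below. It assumes the standard Hopfield form of the energy with states of +1 or -1, symmetric weights, and no self-connections; none of these details are spelled out in the notes above, and the weights are made up.

```python
def energy(states, weights, biases):
    """Global energy of a symmetric network (assumed standard Hopfield form)."""
    n = len(states)
    pair_term = sum(weights[i][j] * states[i] * states[j]
                    for i in range(n) for j in range(i + 1, n))
    bias_term = sum(b * s for b, s in zip(biases, states))
    return -pair_term - bias_term

def update_unit(states, weights, biases, i):
    """Set unit i to whichever state agrees with its total input."""
    total = biases[i] + sum(weights[i][j] * states[j]
                            for j in range(len(states)) if j != i)
    states[i] = 1 if total >= 0 else -1

# Hypothetical 3-unit net; weights[i][j] == weights[j][i], zero diagonal.
weights = [[0.0, 1.0, -2.0],
           [1.0, 0.0, 0.5],
           [-2.0, 0.5, 0.0]]
biases = [0.1, -0.2, 0.0]
states = [1, -1, 1]
energies = [energy(states, weights, biases)]
for sweep in range(3):
    for i in range(len(states)):
        update_unit(states, weights, biases, i)
        energies.append(energy(states, weights, biases))
```

Under these assumptions no single-unit update can raise the energy, so the network settles into a stable state instead of cycling.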

Symmetrically connected networks with hidden units

These are called “Boltzmann machines”.

- They are much more powerful models than Hopfield nets.

- They are less powerful than recurrent neural networks.

- They have a beautifully simple learning algorithm.

We will cover Boltzmann machines towards the end of the course.

Transcript

In this video I'm going to describe various kinds of architectures for neural networks. What I mean by an architecture, is the way in which the neurons are connected together.

By far the commonest type of architecture in practical applications is a feed-forward neural network, where the information comes into the input units and flows in one direction through hidden layers until it reaches the output units.

A much more interesting kind of architecture is a recurrent neural network, in which information can flow round in cycles. These networks can remember information for a long time. They can exhibit all sorts of interesting oscillations, but they are much more difficult to train, in part because they are so much more complicated in what they can do. Recently, however, people have made a lot of progress in training recurrent neural networks, and they can now do some fairly impressive things.

The last kind of architecture that I'll describe is a symmetrically-connected network, one in which the weights are the same in both directions between two units.

The commonest type of neural network in practical applications is a feed-forward neural network. This has some input units in the first layer at the bottom, some output units in the last layer at the top, and one or more layers of hidden units.

If there's more than one layer of hidden units, we call them deep neural networks. These networks compute a series of transformations between their input and their output. So at each layer, you get a new representation of the input in which things that were similar in the previous layer may have become less similar, or things that were dissimilar in the previous layer may have become more similar.

So in speech recognition, for example, we'd like the same thing said by different speakers to become more similar, and different things said by the same speaker to be less similar as we go up through the layers of the network. In order to achieve this, we need the activities of the neurons in each layer to be a non-linear function of the activities in the layer below.

Recurrent neural networks are much more powerful than feed-forward neural networks. They have directed cycles in their connection graph. What this means is that if you start at a node or a neuron and you follow the arrows, you can sometimes get back to the neuron you started at. They can have very complicated dynamics, and this can make them very difficult to train. There's a lot of interest at present in finding efficient ways of training recurrent networks, because they are so powerful if we can train them. They're also more biologically realistic.

Recurrent neural networks with multiple hidden layers are really just a special case of a general recurrent neural network that has some of its hidden-to-hidden connections missing. Recurrent networks are a very natural way to model sequential data.

So what we do is we have connections between hidden units. And the hidden units act like a network that's very deep in time. So at each time step the states of the hidden units determine the states of the hidden units at the next time step. One way in which they differ from feed-forward nets is that we use the same weights at every time step. So if you look at those red arrows where the hidden units are determining the next state of the hidden units, the weight matrix depicted by each red arrow is the same at each time step.

They also get inputs at every time step and often give outputs at every time step, and they'll use the same weight matrices too.

Recurrent networks have the ability to remember information in the hidden state for a long time. Unfortunately, it's quite hard to train them to use that ability. However, recent algorithms have been able to do that.

So just to show you what recurrent neural nets can now do, I'm gonna show you a net designed by Ilya Sutskever. It's a special kind of recurrent neural net, slightly different from the kind in the diagram on the previous slide, and it's used to predict the next character in a sequence. So Ilya trained it on lots and lots of strings from English Wikipedia. It's seeing English characters and trying to predict the next English character. He actually used 86 different characters to allow for punctuation, and digits, and capital letters and so on.

After you've trained it, one way of seeing how well it can do is to see whether it assigns high probability to the next character that actually occurs. Another way of seeing what it can do is to get it to generate text. So what you do is you give it a string of characters and get it to predict probabilities for the next character. Then you pick the next character from that probability distribution. It's no use picking the most likely character.

If you do that after a while it starts saying the United States of the United States of the United States of the United States. That tells you something about Wikipedia. But if you pick from the probability distribution, so if it says there's a one in 100 chance it was a Z, you pick a Z one time in 100, then you see much more about what it's learned.

The next slide shows an example of the text that it generates, and it's interesting to notice how much it has learned just by reading Wikipedia and trying to predict the next character. So remember, this text was generated one character at a time. Notice that it makes reasonably sensible sentences, and they're composed almost entirely of real English words. Occasionally it makes a non-word, but they're typically sensible ones. And notice that within a sentence, it has some thematic sense.

So the phrase “several Irish intelligence agencies in the Mediterranean region” has problems, but it's almost good English. Notice also the thing it says at the end: “such that it is the blurring of appearing on any well-paid type of box printer”. There's a certain sort of thematic thing there about appearance and printing, and the syntax is pretty good. And remember, that's one character at a time.

Quite different from recurrent nets are symmetrically connected networks. In these, the connections between units have the same weight in both directions. John Hopfield and others realized that symmetric networks are much easier to analyze than recurrent networks. This is mainly because they're more restricted in what they can do, and that's because they obey an energy function. So they cannot, for example, model cycles. You can't get back to where you started in one of these symmetric networks.

Perceptrons: The first generation of neural networks

The standard paradigm for statistical pattern recognition

Figure 4: Standard perceptron architecture

- Convert the raw input vector into a vector of feature activations.

Use hand-written programs based on common sense to define the features.

- Learn how to weight each of the feature activations to get a single scalar quantity.

- If this quantity is above some threshold, decide that the input vector is a positive example of the target class.
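The three steps above can be sketched as a small pipeline. The two feature detectors, the weights, and the threshold are hypothetical values chosen for illustration; in practice the weights would be learned and the features designed for the task.

```python
def extract_features(raw):
    """Hand-written, non-learned feature detectors (hypothetical examples)."""
    mean_intensity = sum(raw) / len(raw)
    contrast = max(raw) - min(raw)
    return [mean_intensity, contrast]

def classify(raw, weights, threshold):
    """Weight the feature activations, sum them, and compare to a threshold."""
    features = extract_features(raw)
    evidence = sum(w * f for w, f in zip(weights, features))
    return 1 if evidence > threshold else 0

# Made-up weights and threshold; only this stage would be learned.
weights = [-1.0, 2.0]
threshold = 0.5
high_contrast = classify([0.1, 0.9, 0.2, 0.8], weights, threshold)
flat = classify([0.5, 0.5, 0.5, 0.5], weights, threshold)
```

Only the weighting stage is meant to learn; `extract_features` stays fixed, which is why the choice of features matters so much.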

The history of perceptrons

They were popularised by Frank Rosenblatt in the early 1960’s.

- They appeared to have a very powerful learning algorithm.

- Lots of grand claims were made for what they could learn to do.

In 1969, Minsky and Papert published a book called “Perceptrons” that analysed what they could do and showed their limitations.

- Many people thought these limitations applied to all neural network models.

The perceptron learning procedure is still widely used today for tasks with enormous feature vectors that contain many millions of features.

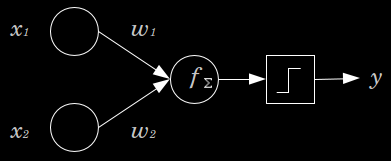

Binary threshold neurons (decision units)

McCulloch-Pitts (1943)

- First compute a weighted sum of the inputs from other neurons (plus a bias).

- Then output a 1 if the weighted sum exceeds zero.

\(\displaystyle z = b + \sum_{i} x_{i}w_{i}\) \(\displaystyle y = \begin{cases} 1 & \text{if } z \ge 0\\ 0 & \text{otherwise} \end{cases}\)
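A direct transcription of this decision unit into Python might look like the following. The function name and the example weights are assumptions; the example weights happen to make the unit fire only when both inputs are on.

```python
def binary_threshold_neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, then a hard threshold at zero."""
    z = bias + sum(x * w for x, w in zip(inputs, weights))
    return 1 if z >= 0 else 0

# Hypothetical weights and bias.
weights = [1.0, 1.0]
bias = -1.5
both_on = binary_threshold_neuron([1, 1], weights, bias)
one_on = binary_threshold_neuron([1, 0], weights, bias)
```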

How to learn biases using the same rule as we use for learning weights

Figure 5: Binary threshold neurons

A threshold is equivalent to having a negative bias. We can avoid having to figure out a separate learning rule for the bias by using a trick:

- A bias is exactly equivalent to a weight on an extra input line that always has an activity of 1.

- We can now learn a bias as if it were a weight.
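The equivalence can be checked with a short sketch. Both functions are hypothetical helpers: the first keeps the bias separate, the second folds it into the weight vector by prepending a constant input of 1.

```python
def output_with_bias(inputs, weights, bias):
    """Decision unit with an explicit, separate bias term."""
    z = bias + sum(x * w for x, w in zip(inputs, weights))
    return 1 if z >= 0 else 0

def output_with_extra_input(inputs, weights, bias):
    """Same unit, with the bias treated as a weight on an input fixed at 1."""
    augmented_inputs = [1.0] + list(inputs)     # extra line, always active at 1
    augmented_weights = [bias] + list(weights)  # the bias is now just another weight
    z = sum(x * w for x, w in zip(augmented_inputs, augmented_weights))
    return 1 if z >= 0 else 0

# Made-up weights and bias, checked on all four binary inputs.
weights = [1.0, 1.0]
bias = -1.5
patterns = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
agree = all(output_with_bias(p, weights, bias) ==
            output_with_extra_input(p, weights, bias) for p in patterns)
```

Because the two forms compute the same sum, any rule that learns weights will learn the bias too once the extra input is in place.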

The perceptron convergence procedure:

Training binary output neurons as classifiers

Add an extra component with value 1 to each input vector. The “bias” weight on this component is minus the threshold. Now we can forget the threshold.

Pick training cases using any policy that ensures that every training case will keep getting picked.

- If the output unit is correct, leave its weights alone.

- If the output unit incorrectly outputs a zero, add the input vector to the weight vector.

- If the output unit incorrectly outputs a 1, subtract the input vector from the weight vector.

This is guaranteed to find a set of weights that gets the right answer for all the training cases if any such set exists.
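The procedure can be sketched as below. Cycling through the cases in order is one policy that keeps picking every case. The `max_sweeps` cap is an added assumption so that the loop stops when no solution exists, and the logical-AND training set is a made-up example.

```python
def predict(weights, x):
    """Binary threshold output for an input that already includes the constant 1."""
    z = sum(w * xi for w, xi in zip(weights, x))
    return 1 if z >= 0 else 0

def train_perceptron(cases, max_sweeps=100):
    """cases: list of (input_vector, target) pairs with targets 0 or 1.
    Returns (weights, converged); weights[0] plays the role of the bias."""
    augmented = [([1.0] + list(x), t) for x, t in cases]
    weights = [0.0] * len(augmented[0][0])
    for _ in range(max_sweeps):  # cycling means every case keeps getting picked
        mistakes = 0
        for x, target in augmented:
            if predict(weights, x) == target:
                continue  # correct: leave the weights alone
            mistakes += 1
            if target == 1:  # incorrectly output 0: add the input vector
                weights = [w + xi for w, xi in zip(weights, x)]
            else:            # incorrectly output 1: subtract the input vector
                weights = [w - xi for w, xi in zip(weights, x)]
        if mistakes == 0:
            return weights, True
    return weights, False

# Made-up training set: logical AND of two binary inputs.
and_cases = [([0.0, 0.0], 0), ([0.0, 1.0], 0), ([1.0, 0.0], 0), ([1.0, 1.0], 1)]
and_weights, and_converged = train_perceptron(and_cases)
```

A full sweep with no mistakes means the current weights classify every training case correctly, so the loop can stop there.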

Transcript

In this video, I'm gonna talk about perceptrons. These were investigated in the early 1960's, and initially they looked very promising as learning devices. But then they fell into disfavor because Minsky and Papert showed they were rather restricted in what they could learn to do.

In statistical pattern recognition, there's a standard way to recognize patterns. We first take the raw input, and we convert it into a set or vector of feature activations. We do this using hand-written programs which are based on common sense.

So that part of the system does not learn. We look at the problem and we decide what the good features should be. We try some features to see if they work or don't work, we try some more features, and eventually we get a set of features that allows us to solve the problem by using a subsequent learning stage. What we learn is how to weight each of the feature activations, in order to get a single scalar quantity.

So the weights on the features represent how much evidence the feature gives you, in favor or against the hypothesis that the current input is an example of the kind of pattern you want to recognize. And when we add up all the weighted features, we get a sort of total evidence in favor of the hypothesis that this is the kind of pattern we want to recognize. And if that evidence is above some threshold, we decide that the input vector is a positive example of the class of patterns we're trying to recognize.

A perceptron is a particular example of a statistical pattern recognition system. So there are actually many different kinds of perceptrons, but the standard kind, which Rosenblatt called an alpha perceptron, consists of some inputs which are then converted into feature activities. They might be converted by things that look a bit like neurons, but that stage of the system does not learn.

Once you've got the activities of the features, you then learn some weights, so that you can take the feature activities times the weights and you decide whether or not it's an example of the class you're interested in by seeing whether that sum of feature activities times learned weights is greater than a threshold.

Perceptrons have an interesting history. They were popularized in the early 1960s by Frank Rosenblatt. He wrote a great big book called Principles of Neurodynamics, in which he described many different kinds of perceptrons, and that book was full of ideas. The most important thing in the book was a very powerful learning algorithm, or something that appeared to be a very powerful learning algorithm. A lot of grand claims were made for what perceptrons could do using this learning algorithm.

For example, people claimed they could tell the difference between pictures of tanks and pictures of trucks, even if the tanks and trucks were sort of partially obscured in a forest. Now some of those claims turned out to be false. In the case of the tanks and the trucks, it turned out the pictures of the tanks were taken on a sunny day, and the pictures of the trucks were taken on a cloudy day.

All the perceptron was doing was measuring the total intensity of all the pixels. That's something we humans are fairly insensitive to. We notice the things in the picture. But a perceptron can easily learn to add up the total intensity. That's the kind of thing that gives an algorithm a bad name.

In 1969, Minsky and Papert published a book called Perceptrons that analyzed what perceptrons could do and showed their limitations. Many people thought those limitations applied to all neural network models. And the general feeling within artificial intelligence was that Minsky and Papert had shown that neural network models were nonsense or that they couldn't learn difficult things. Minsky and Papert themselves knew that they hadn't shown that. They'd just shown that perceptrons of the kind for which the powerful learning algorithm applied could not do a lot of things, or rather they couldn't do them by learning. They could do them if you sort of hand-wired the answer in the inputs, but not by learning.

But that result got wildly overgeneralized, and when I started working on neural network models in the 1970s, people in artificial intelligence kept telling me that Minsky and Papert have proved that these models were no good. Actually, the perceptron convergence procedure, which we'll see in a minute, is still widely used today for tasks that have very big feature vectors.

So, Google, for example, uses it to predict things from very big vectors of features.

So, the decision unit in a perceptron is a binary threshold neuron. We've seen this before, and just to refresh you on those: they compute a weighted sum of inputs they get from other neurons. They add on a bias to get their total input. And then they give an output of one if that sum exceeds zero, and they give an output of zero otherwise.

We don't want to have to have a separate learning rule for learning biases, and it turns out we can treat biases just like weights. We take every input vector and we stick a one on the front of it, and we treat the bias as the weight on that first feature, which always has a value of one. So the bias is just the negative of the threshold. And using this trick, we don't need a separate learning rule for the bias. It's exactly equivalent to learning a weight on this extra input line.

So here's the very powerful learning procedure for perceptrons, and it's a learning procedure that's guaranteed to work, which is a nice property to have. Of course you have to look at the small print later, about why that guarantee isn't quite as good as you think it is.

So we first add this extra component with a value of one to every input vector. Now we can forget about the biases. And then we keep picking training cases, using any policy we like, as long as we ensure that every training case gets picked without waiting too long. I'm not gonna define precisely what I mean by that.

If you're a mathematician, you could think about what might be a good definition. Now, having picked a training case, you look to see if the output is correct. If it is correct, you don't change the weights. If the output unit outputs a zero when it should've output a one, in other words, it said it's not an instance of the pattern we're trying to recognize, when it really is, then all we do is we add the input vector to the weight vector of the perceptron.

Conversely, if the output unit outputs a one when it should have output a zero, we subtract the input vector from the weight vector of the perceptron. And what's surprising is that that simple learning procedure is guaranteed to find you a set of weights that will get the right answer for every training case. The proviso is that it can only do it if there is such a set of weights, and for many interesting problems there is no such set of weights.

Whether or not a set of weights exists depends very much on what features you use. So it turns out for many problems the difficult bit is deciding what features to use. If you're using the appropriate features, learning may then become easy. If you're not using the right features, learning becomes impossible, and all the work is in deciding the features.

A geometrical view of perceptrons

Warning!

For non-mathematicians, this is going to be tougher than the previous material.

- You may have to spend a long time studying the next two parts.

If you are not used to thinking about hyper-planes in high-dimensional spaces, now is the time to learn. To deal with hyper-planes in a 14-dimensional space, visualize a 3-D space and say “fourteen” to yourself very loudly. Everyone does it.

- But remember that going from 13-D to 14-D creates as much extra complexity as going from 2-D to 3-D.

Weight-space

Figure 6: Weight space

This space has one dimension per weight.

A point in the space represents a particular setting of all the weights.

Assuming that we have eliminated the threshold, each training case can be represented as a hyperplane through the origin.

- The weights must lie on one side of this hyper-plane to get the answer correct.

Each training case defines a plane (shown as a black line)

- The plane goes through the origin and is perpendicular to the input vector.

- On one side of the plane the output is wrong because the scalar product of the weight vector with the input vector has the wrong sign.
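The sign test can be sketched directly. The input vector and the two weight vectors are made-up 2-D examples, and the names `green` and `red` simply label a weight vector on each side of the plane.

```python
def scalar_product(u, v):
    return sum(a * b for a, b in zip(u, v))

def is_on_correct_side(weight_vector, input_vector, target):
    """True if this weight vector gives the right output for this training case."""
    z = scalar_product(weight_vector, input_vector)
    output = 1 if z >= 0 else 0
    return output == target

# Hypothetical training case whose plane is perpendicular to input_vector.
input_vector = [1.0, 2.0]
green = [2.0, 1.0]   # scalar product 4: angle with the input is under 90 degrees
red = [1.0, -2.0]    # scalar product -3: angle with the input is over 90 degrees
```

If the correct answer is 1, `green` is on the good side and `red` on the bad side; if the correct answer is 0, the roles swap.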

The cone of feasible solutions

To get all training cases right we need to find a point on the right side of all the planes.

- There may not be any such point!

If there are any weight vectors that get the right answer for all cases, they lie in a hyper-cone with its apex at the origin.

- So the average of two good weight vectors is a good weight vector.

The problem is convex.
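A small numerical check of the averaging property, using a made-up training set (logical AND with a leading constant input of 1) and two hand-picked weight vectors that both happen to be feasible for it:

```python
def gets_all_right(weights, cases):
    """True if the weight vector gives the correct output on every training case."""
    for x, target in cases:
        z = sum(w * xi for w, xi in zip(weights, x))
        if (1 if z >= 0 else 0) != target:
            return False
    return True

# Hypothetical training cases; the first component is the constant 1.
cases = [([1.0, 0.0, 0.0], 0), ([1.0, 0.0, 1.0], 0),
         ([1.0, 1.0, 0.0], 0), ([1.0, 1.0, 1.0], 1)]
w_a = [-3.0, 2.0, 1.0]
w_b = [-5.0, 2.0, 4.0]
average = [(a + b) / 2 for a, b in zip(w_a, w_b)]
doubled = [2 * a for a in w_a]  # scaling by a positive factor stays in the cone
```

Both the average and the rescaled vector pass the same check, which matches the picture of a cone with its apex at the origin.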

Transcript

In this video, we're going to get a geometrical understanding of what happens when a perceptron learns. To do this, we have to think in terms of a weight space. It's a high-dimensional space in which each point corresponds to a particular setting for all the weights.

In this space, we can represent the training cases as planes, and learning consists of trying to get the weight vector on the right side of all the training planes. For non-mathematicians, this may be tougher than previous material. You may have to spend quite a long time studying the next two parts. In particular, if you're not used to thinking about hyperplanes in high-dimensional spaces, you're going to have to learn that. To deal with hyperplanes in a 14-dimensional space, for example, what you do is you visualize a 3-dimensional space and you say “fourteen” to yourself very loudly. Everybody does it. But remember that when you go from a 13-dimensional space to a 14-dimensional space, you're creating as much extra complexity as when you go from a 2D space to a 3D space. 14-dimensional space is very big and very complicated.

So, we are going to start off by thinking about weight space. This is the space that has one dimension for each weight in the perceptron. A point in the space represents a particular setting of all the weights. Assuming we've eliminated the threshold, we can represent every training case as a hyperplane through the origin in weight space. So, points in the space correspond to weight vectors and training cases correspond to planes. And, for a particular training case, the weights must lie on one side of that hyperplane, in order to get the answer correct for that training case.

So, let's look at a picture of it so we can understand what's going on. Here's a picture of weight space. The training case, and we're going to think of one training case for now, defines a plane, which in this 2D picture is just the black line. The plane goes through the origin and it's perpendicular to the input vector for that training case, which here is shown as a blue vector. We're going to consider a training case in which the correct answer is one. And for that kind of training case, the weight vector needs to be on the correct side of the hyperplane in order to get the answer right. It needs to be on the same side of the hyperplane as the direction in which the training vector points.

For any weight vector like the green one, that's on that side of the hyperplane, the angle with the input vector will be less than 90 degrees. So, the scalar product of the input vector with the weight vector will be positive. And since we already got rid of the threshold, that means the perceptron will give an output of one. It'll say yes, and so we'll get the right answer.

Conversely, if we have a weight vector like the red one, that's on the wrong side of the plane, the angle with the input vector will be more than 90 degrees, so the scalar product of the weight vector and the input vector will be negative, and we'll get a scalar product that is less than zero so the perceptron will say, no or zero, and in this case, we'll get the wrong answer.

So, to summarize, on one side of the plane, all the weight vectors will get the right answer. And on the other side of the plane, all the possible weight vectors will get the wrong answer.

Now, let's look at a different training case, in which the correct answer is zero. So here, we have the weight space again. We've chosen a different input vector, and for this input vector, the right answer is zero. So again, the input case corresponds to a plane shown by the black line. And in this case, any weight vector that makes an angle of less than 90 degrees with the input vector will give us a positive scalar product, causing the perceptron to say yes or one, and it will get the answer wrong.

Conversely, the weight vectors on the other side of the plane will have an angle of greater than 90 degrees with the input vector, and they will correctly give the answer of zero. So, as before, the plane goes through the origin, it's perpendicular to the input vector, and on one side of the plane, all the weight vectors are bad, and on the other side, they're all good.

Now, let's put those two training cases together in one picture of weight space. Our picture of weight space is getting a little bit crowded. I've moved the input vector over so we don't have all the vectors in quite the same place. And now, you can see that there's a cone of possible weight vectors. And any weight vectors inside that cone will get the right answer for both training cases.

Of course, there doesn't have to be any cone like that. It could be there are no weight vectors that get the right answers for all of the training cases. But if there are any, they'll lie in a cone.

So, what the learning algorithm needs to do is consider the training cases one at a time and move the weight vector around in such a way that it eventually lies in this cone. One thing to notice is that if you get a good weight vector, that is, something that works for all the training cases, it'll lie in the cone.

And if you had another one, it'll lie in the cone. And so, if you take the average of those two weight vectors, that will also lie in the cone. That means the problem is convex. The average of two solutions is itself a solution.

And in general in machine learning if you can get a convex learning problem, that makes life easy.

Why the learning works

Why the learning procedure works (first attempt)

Consider the squared distance \(d_a^2 + d_b^2\) between any feasible weight vector and the current weight vector.

- Hopeful claim: Every time the perceptron makes a mistake, the learning algorithm moves the current weight vector closer to all feasible weight vectors.

Problem case: The weight vector may not get closer to this feasible vector!

Why the learning procedure works

So consider generously feasible weight vectors that lie within the feasible region by a margin at least as great as the length of the input vector that defines each constraint plane.

- Every time the perceptron makes a mistake, the squared distance to all of these generously feasible weight vectors is always decreased by at least the squared length of the update vector.
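Both the problem case and the fix can be illustrated with made-up 2-D numbers. Here the input vector is large, the current weight vector is just on the wrong side of its plane, one feasible vector is just on the right side, and another is generously feasible. All the values are hypothetical.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def squared_distance(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v))

x = [3.0, 0.0]                 # large input vector; the correct answer is 1
current = [-0.1, 1.0]          # dot(current, x) < 0, so the output is wrongly 0
barely_feasible = [0.1, 1.0]   # just on the right side of the plane
generous = [4.0, 1.0]          # dot(generous, x) = 12, at least |x|^2 = 9

# Mistake on a positive case: add the input vector to the weight vector.
updated = [w + xi for w, xi in zip(current, x)]

before_barely = squared_distance(current, barely_feasible)
after_barely = squared_distance(updated, barely_feasible)
before_generous = squared_distance(current, generous)
after_generous = squared_distance(updated, generous)
```

The update overshoots the barely feasible vector, so that distance grows, while the squared distance to the generously feasible vector shrinks by more than the squared length of the input vector.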

Informal sketch of proof of convergence

Each time the perceptron makes a mistake, the current weight vector moves to decrease its squared distance from every weight vector in the “generously feasible” region.

The squared distance decreases by at least the squared length of the input vector.

So after a finite number of mistakes, the weight vector must lie in the feasible region if this region exists.
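The key step can be written out for a training case whose correct answer is 1; the case with correct answer 0 is symmetric. The symbols are notation assumed here, not taken from the slides: \(\mathbf{w}\) is the current weight vector, \(\mathbf{w}^{*}\) a generously feasible weight vector, and \(\mathbf{x}\) the input vector, so the update is \(\mathbf{w} \leftarrow \mathbf{w} + \mathbf{x}\).

\[
\|\mathbf{w} + \mathbf{x} - \mathbf{w}^{*}\|^{2} = \|\mathbf{w} - \mathbf{w}^{*}\|^{2} + 2\,\mathbf{x}\cdot(\mathbf{w} - \mathbf{w}^{*}) + \|\mathbf{x}\|^{2}
\]

A mistake on this case means \(\mathbf{w}\cdot\mathbf{x} < 0\). Reading the margin condition as \(\mathbf{w}^{*}\cdot\mathbf{x} / \|\mathbf{x}\| \ge \|\mathbf{x}\|\) gives \(\mathbf{w}^{*}\cdot\mathbf{x} \ge \|\mathbf{x}\|^{2}\). Together these give \(2\,\mathbf{x}\cdot(\mathbf{w} - \mathbf{w}^{*}) < -2\|\mathbf{x}\|^{2}\), and therefore

\[
\|\mathbf{w} + \mathbf{x} - \mathbf{w}^{*}\|^{2} < \|\mathbf{w} - \mathbf{w}^{*}\|^{2} - \|\mathbf{x}\|^{2}.
\]

Since the squared distance cannot go below zero and each mistake removes at least \(\|\mathbf{x}\|^{2}\) from it, only finitely many mistakes are possible as long as the input vectors are not arbitrarily short.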

Transcript

In this video, we're going to look at a proof that the perceptron learning procedure will eventually get the weights into the cone of feasible solutions. I don't want you to get the wrong idea about the course from this video. In general, it's gonna be about engineering, not about proofs of things.

There'll be very few proofs in the course. But we get to understand quite a lot more about perceptrons when we try and prove that they will eventually get the right answer. So we're going to use our geometric understanding of what's happening in weight space as a perceptron learns, to get a proof that the perceptron will eventually find a weight vector that gets the right answer for all of the training cases, if any such vector exists.

And our proof is gonna assume that there is a vector that gets the right answer for all training cases. We'll call that a feasible vector. An example of a feasible vector is shown by the green dot in the diagram. So we start with a weight vector that's getting some of the training cases wrong, and in the diagram we've shown a training case that it is getting wrong. What we want to show, and this is the idea for the proof, is that every time it gets a training case wrong, it will update the current weight vector in a way that makes it closer to every feasible weight vector.

So we can represent the squared distance of the current weight vector from a feasible weight vector as the sum of a squared distance along the line of the input vector that defines the training case, and another squared distance orthogonal to that line. The orthogonal squared distance won't change, and the squared distance along the line of the input vector will get smaller.

So our hopeful claim is that, every time the perceptron makes a mistake, our current weight vector is going to get closer to all feasible weight vectors.

Now this is almost right, but there's an unfortunate problem. If you look at the feasible weight vector in gold, it's just on the right side of the plane that defines one of the training cases. And the current weight vector is just on the wrong side, and the input vector is quite big.

So when we add the input vector to the current weight vector, we actually get further away from that gold feasible weight vector. So our hopeful claim doesn't work, but we can fix it up so that it does. What we're going to do is define a generously feasible weight vector. That's a weight vector that not only gets every training case right, but gets it right by at least a certain margin, where the margin is as big as the input vector for that training case.

So we take the cone of feasible solutions, and inside that we have another cone of generously feasible solutions, which get everything right by at least the size of the input vector. And now our proof will work. Now we can make the claim that every time the perceptron makes a mistake, the squared distance to all of the generously feasible weight vectors will be decreased by at least the squared length of the input vector, which is the update we make.

So given that, we can get an informal sketch of a proof of convergence. I'm not going to try and make this formal. I'm more interested in the engineering than the mathematics. If you're a mathematician, I'm sure you can make it formal yourself. So, every time the perceptron makes a mistake, the current weight vector moves and it decreases its squared distance from every generously feasible weight vector by at least the squared length of the current input vector.

And so the squared distance to all the generously feasible weight vectors decreases by at least that squared length. Assuming that none of the input vectors are infinitesimally small, that means that after a finite number of mistakes the weight vector must lie in the feasible region, if this region exists.

Notice it doesn't have to lie in the generously feasible region, but it has to get into the feasible region to stop it making mistakes. And that's it. That's our informal sketch of a proof that the perceptron convergence procedure works. But notice, it all depends on the assumption that there is a generously feasible weight vector. And if there is no such vector, the whole proof falls apart.
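The key step of the proof sketch can be checked numerically. The code below is a minimal sketch under some assumptions that are not in the lecture: targets are coded as +1 and -1, the threshold is folded into the weights as an extra input that is always 1, the training set is the logical AND of two bits, and `generous` is a hand-picked vector that gets every case right by at least the squared length of its input.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def squared_distance(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v))

def train_perceptron(cases, generous, max_epochs=1000):
    """Run the perceptron procedure on (x, y) cases with y in {+1, -1}.

    Returns the final weights and, for every mistake, the pair
    (decrease in squared distance to `generous`, squared length of x).
    """
    w = [0.0] * len(cases[0][0])
    drops = []
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in cases:
            if y * dot(w, x) <= 0:  # wrong side of (or on) the plane
                before = squared_distance(w, generous)
                w = [wi + y * xi for wi, xi in zip(w, x)]  # add or subtract x
                after = squared_distance(w, generous)
                drops.append((before - after, dot(x, x)))
                mistakes += 1
        if mistakes == 0:  # a full pass with no mistakes: w is feasible
            break
    return w, drops

# Logical AND; the last input component is a constant 1 acting as the bias.
cases = [((0, 0, 1), -1), ((1, 0, 1), -1), ((0, 1, 1), -1), ((1, 1, 1), +1)]
generous = (6.0, 6.0, -8.0)  # hand-picked: y * (generous . x) >= |x|^2 for all cases

weights, drops = train_perceptron(cases, generous)
print(weights, len(drops))
```

On every mistake the recorded decrease is at least the squared length of the input vector, so the number of mistakes is bounded and the loop stops once the weights are feasible.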

What perceptrons can’t do

The limitations of Perceptrons

If you are allowed to choose the features by hand and if you use enough features, you can do almost anything.

- For binary input vectors, we can have a separate feature unit for each of the exponentially many binary vectors and so we can make any possible discrimination on binary input vectors.

- This type of table look-up won’t generalize.

But once the hand-coded features have been determined, there are very strong limitations on what a perceptron can learn.
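The table look-up idea above can be sketched as follows. This is an illustrative example, not code from the course: `one_hot_features` and `train_threshold_unit` are hypothetical helper names, and 3-bit parity is chosen as a target because it is not linearly separable in the raw inputs.

```python
from itertools import product

def one_hot_features(bits):
    """One feature unit per possible binary vector: only the unit for `bits` is on."""
    index = int("".join(str(b) for b in bits), 2)
    features = [0] * (2 ** len(bits))
    features[index] = 1
    return features

def predict(weights, features):
    """Binary threshold unit with its threshold fixed at zero."""
    return 1 if sum(w * f for w, f in zip(weights, features)) > 0 else 0

def train_threshold_unit(cases, epochs=20):
    """Perceptron learning on (features, target) pairs with targets 0 or 1."""
    weights = [0.0] * len(cases[0][0])
    for _ in range(epochs):
        for features, target in cases:
            output = predict(weights, features)
            # Add the input if the output is too low, subtract it if too high.
            weights = [w + (target - output) * f for w, f in zip(weights, features)]
    return weights

inputs = list(product([0, 1], repeat=3))
cases = [(one_hot_features(bits), sum(bits) % 2) for bits in inputs]  # parity targets
weights = train_threshold_unit(cases)
learned = [predict(weights, features) for features, _ in cases]
print(learned)
```

Each weight is set only by its own training case, so a binary vector left out of training keeps its initial weight. That is the sense in which this kind of look-up does not generalize.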

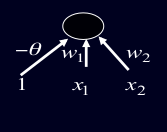

What binary threshold neurons cannot do

Figure 7: Binary threshold neurons

A binary threshold output unit cannot even tell if two single bit features are the same!

- Positive cases (same): (1,1) → 1; (0,0) → 1

- Negative cases (different): (1,0) → 0; (0,1) → 0

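The four input-output pairs give four inequalities that are impossible to satisfy: w1 + w2 ≥ θ, 0 ≥ θ, w1 < θ, w2 < θ. Adding the last two gives w1 + w2 < 2θ, and since θ ≤ 0 we have 2θ ≤ θ, so w1 + w2 < θ, which contradicts the first inequality.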
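As a sanity check on the inequalities, a brute-force search over a grid of weights and thresholds finds no binary threshold unit that computes the "same" function. This is only a sketch: the grid and the function names are arbitrary choices, and a finite search illustrates the result rather than proving it. A unit that detects "both bits on" is included for contrast.

```python
from itertools import product

def threshold_unit(w1, w2, theta, x1, x2):
    """Binary threshold unit: outputs 1 when the weighted input reaches theta."""
    return 1 if w1 * x1 + w2 * x2 >= theta else 0

def find_solution(targets, grid):
    """Return the first (w1, w2, theta) on the grid matching all targets, else None."""
    for w1, w2, theta in product(grid, repeat=3):
        if all(threshold_unit(w1, w2, theta, x1, x2) == t
               for (x1, x2), t in targets.items()):
            return (w1, w2, theta)
    return None

grid = [i / 2 for i in range(-10, 11)]  # -5.0, -4.5, ..., 5.0
same = {(1, 1): 1, (0, 0): 1, (1, 0): 0, (0, 1): 0}
both_on = {(1, 1): 1, (0, 0): 0, (1, 0): 0, (0, 1): 0}

print(find_solution(same, grid))     # None
print(find_solution(both_on, grid))  # some (w1, w2, theta) triple
```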

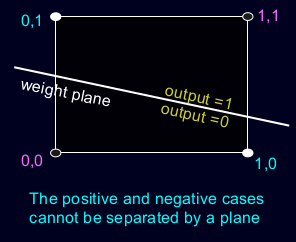

A geometric view of what binary threshold neurons cannot do

Figure 8: Binary threshold neurons limits

Imagine “data-space” in which the axes correspond to components of an input vector.

- Each input vector is a point in this space.

- A weight vector defines a plane in data-space.

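- The weight plane is perpendicular to the weight vector and misses the origin by a distance equal to the threshold divided by the length of the weight vector (so exactly the threshold when the weight vector has unit length).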

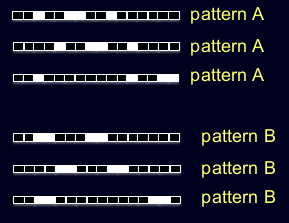
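The geometry of the weight plane can be made concrete with a small sketch. It assumes the plane is the set of inputs whose weighted sum equals the threshold, in which case its distance from the origin is the threshold divided by the length of the weight vector, which is the threshold itself when the weight vector has unit length. The numbers are hypothetical.

```python
import math

def plane_distance_from_origin(weights, threshold):
    """Distance from the origin to the plane w . x = threshold."""
    return abs(threshold) / math.sqrt(sum(w * w for w in weights))

def weighted_sum(weights, x):
    return sum(w * xi for w, xi in zip(weights, x))

weights, threshold = (3.0, 4.0), 10.0  # hypothetical values; length of weights is 5
distance = plane_distance_from_origin(weights, threshold)

# The point on the plane nearest the origin lies along the weight vector,
# which is why the plane is perpendicular to it.
scale = threshold / sum(w * w for w in weights)
nearest = tuple(w * scale for w in weights)
print(distance, nearest)
```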

Discriminating simple patterns under translation with wrap-around

Figure 9: Simple patterns discrimination

Suppose we just use pixels as the features. Can a binary threshold unit discriminate between different patterns that have the same number of on pixels?

- Not if the patterns can translate with wrap-around!

Sketch of a proof that a binary decision unit cannot discriminate patterns with the same number of on pixels (assuming translation with wrap-around)

For pattern A, use training cases in all possible translations.

- Each pixel will be activated by 4 different translations of pattern A.

- So the total input received by the decision unit over all these patterns will be four times the sum of all the weights.

For pattern B, use training cases in all possible translations.

- Each pixel will be activated by 4 different translations of pattern B.

- So the total input received by the decision unit over all these patterns will be four times the sum of all the weights.

But to discriminate correctly, every single case of pattern A must provide more input to the decision unit than every single case of pattern B.

- This is impossible if the sums over cases are the same.
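The counting argument above can be checked with a short sketch. The 10-pixel one-dimensional patterns below are made up for illustration (each has 4 pixels on), and the weights are random integers so that the sums are exact.

```python
import random

def translations(pattern):
    """All cyclic shifts (translations with wrap-around) of a 1-D pixel pattern."""
    return [pattern[s:] + pattern[:s] for s in range(len(pattern))]

def total_input(weights, pattern):
    """Sum of the decision unit's input over every translation of the pattern."""
    return sum(
        sum(w * p for w, p in zip(weights, shifted))
        for shifted in translations(pattern)
    )

pattern_a = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]  # hypothetical pattern A, 4 pixels on
pattern_b = [1, 0, 1, 0, 1, 0, 1, 0, 0, 0]  # hypothetical pattern B, 4 pixels on

rng = random.Random(0)
weights = [rng.randint(-5, 5) for _ in pattern_a]

total_a = total_input(weights, pattern_a)
total_b = total_input(weights, pattern_b)
print(total_a, total_b, 4 * sum(weights))
```

Whatever weights are chosen, both totals equal four times the sum of the weights, so it cannot be the case that every translation of A provides more input than every translation of B.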

Why this result is devastating for Perceptrons

The whole point of pattern recognition is to recognize patterns despite transformations like translation.

Minsky and Papert’s “Group Invariance Theorem” says that the part of a Perceptron that learns cannot learn to do this if the transformations form a group.

- Translations with wrap-around form a group.

To deal with such transformations, a Perceptron needs to use multiple feature units to recognize transformations of informative sub-patterns.

- So the tricky part of pattern recognition must be solved by the hand-coded feature detectors, not the learning procedure.

Learning with hidden units

Networks without hidden units are very limited in the input-output mappings they can learn to model.

- More layers of linear units do not help. It's still linear.

- Fixed output non-linearities are not enough.
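The first point, that stacking linear layers is still linear, can be seen in a few lines. The weight matrices here are arbitrary illustrative values: applying two linear layers in sequence gives the same output as a single layer whose weight matrix is their product.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]

w1 = [[1, 2], [0, -1], [3, 1]]  # hypothetical weights: 2 inputs -> 3 linear hidden units
w2 = [[2, 0, 1], [-1, 1, 0]]    # 3 linear hidden units -> 2 outputs
x = [4, -3]

two_layers = matvec(w2, matvec(w1, x))  # pass through both layers
one_layer = matvec(matmul(w2, w1), x)   # single equivalent linear layer
print(two_layers, one_layer)            # [5, 5] [5, 5]
```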

We need multiple layers of adaptive, non-linear hidden units. But how can we train such nets?

- We need an efficient way of adapting all the weights, not just the last layer. This is hard.

- Learning the weights going into hidden units is equivalent to learning features.

- This is difficult because nobody is telling us directly what the hidden units should do.
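To illustrate why hidden units help, here is a sketch of a network with one layer of two binary threshold hidden units that computes the "same" function on two bits, which a single binary threshold unit cannot do. The weights and thresholds are hand-chosen for illustration and the names are hypothetical. Nothing is learned here; finding such hidden-unit weights automatically is exactly the hard part described above.

```python
def threshold_unit(weights, theta, inputs):
    """Binary threshold unit: outputs 1 when the weighted input reaches theta."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) >= theta else 0

def same_detector(x1, x2):
    """Two hidden threshold units feeding one output threshold unit."""
    both_on = threshold_unit((1, 1), 2, (x1, x2))     # hidden unit: fires only for (1, 1)
    both_off = threshold_unit((-1, -1), 0, (x1, x2))  # hidden unit: fires only for (0, 0)
    return threshold_unit((1, 1), 1, (both_on, both_off))  # fires if either hidden unit fires

for x1, x2 in [(1, 1), (0, 0), (1, 0), (0, 1)]:
    print((x1, x2), same_detector(x1, x2))
```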
